As many of you know, I got a 3D printer for Father’s Day this year. Originally, I got the Bambu Lab A1 Mini combo with the AMS Lite. Since then, I realized I needed a bit more build space, so I sold off the A1 Mini and upgraded to the full-size A1. The seemingly small move from a 1803 build volume to a 2563 build volume sounds small, but it’s a total game changer.

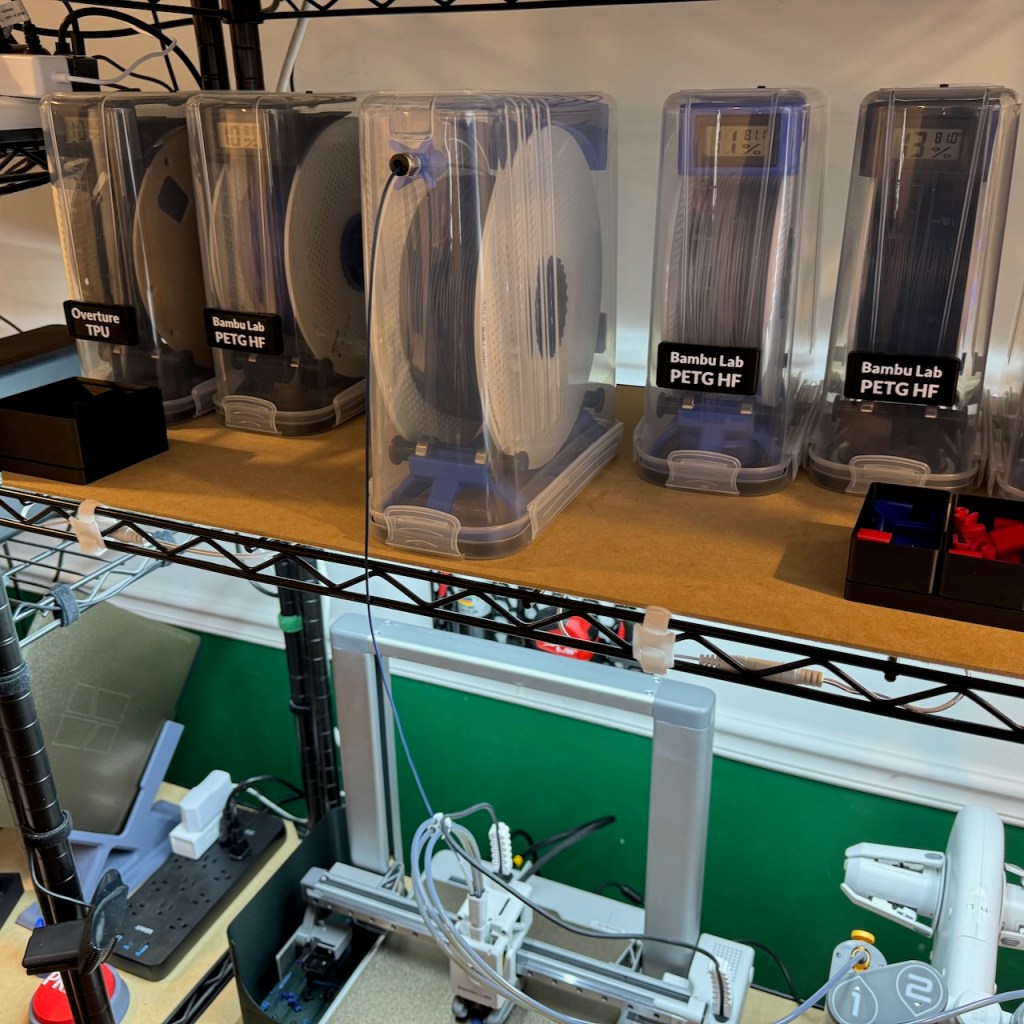

I’ve made a handful of outdoor projects, mainly centered around our fire pit area. Since the stuff lives outdoors, I can’t really make it out of PLA, like most of the stuff I make. Given the lack of enclosed space on the A1 family, I can’t print in materials like ABS or ASA, so that leaves PETG. I gave my wife her choice of colors, and she originally landed on the Bambu Lab PETG-CF in Indigo Blue. It’s a great looking filament, and while at $34.99 a roll, it’s nearly double what I spend for most of the PLA I run, it’s not crazy, so that’s fine. Bambu Lab says PETG-CF is “NOT Ideal” for use in the AMS Lite, so I direct-feed it into the extruder from above, coming from a homemade dry box. Using the dry box enables the filament to (get this…) stay dry, which is great for materials that are very hygroscopic, like TPU, certain flavors of PETG, or the wood-filled PLA I’ve been playing around with.

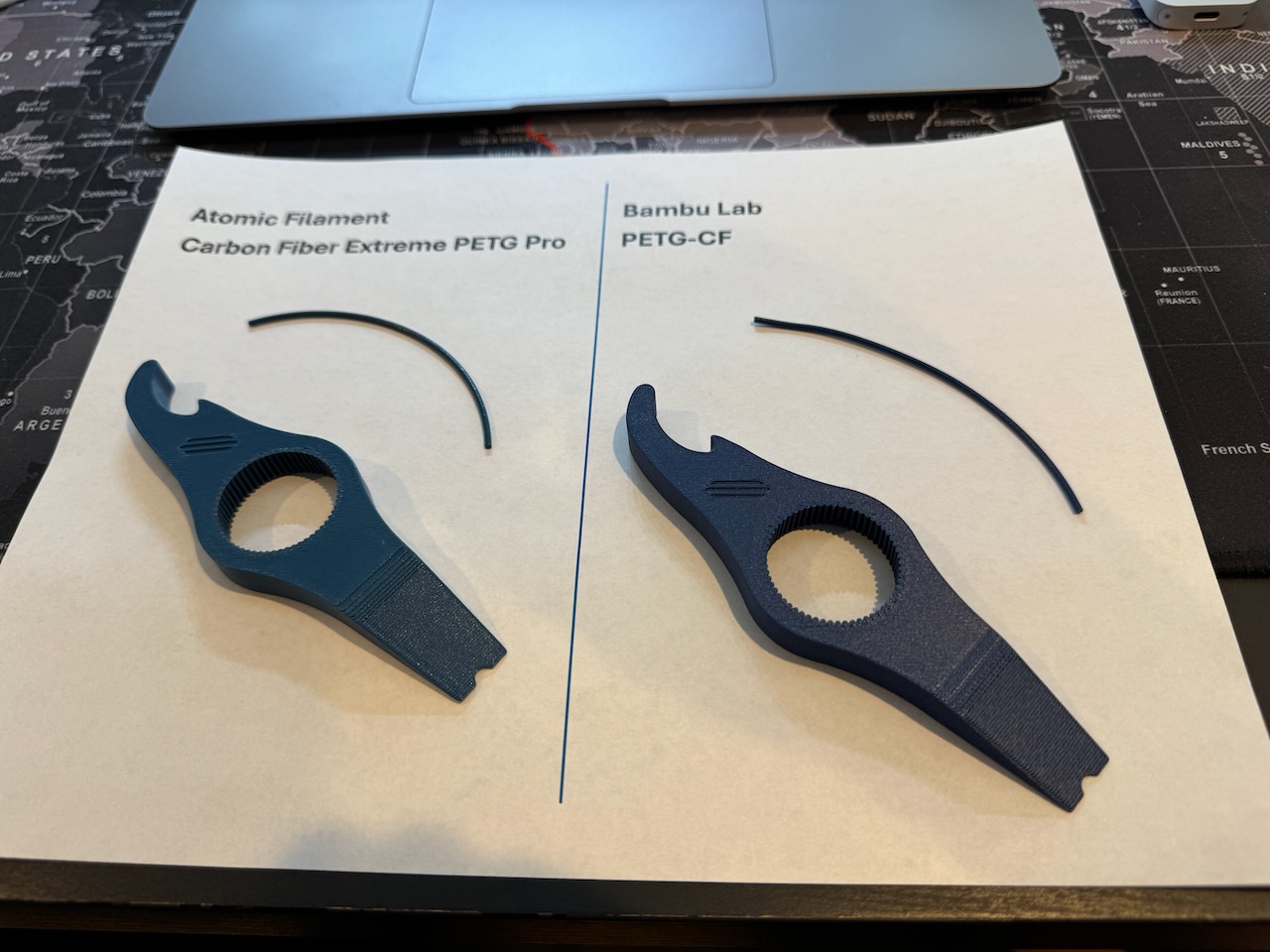

Having seen many folks absolutely rave about Atomic Filaments, I figured I’d give them a shot. I picked up a 1 kilo spool of their Smoke Blue Carbon Fiber PETG Pro, which is a super close match to Bambu’s Indigo Blue PETG-CF. Since I’m starting to run a bit low on the Bambu stuff, I figured I’d go with the near-match on color. The Atomic filament was priced at $49.99, which I paid for myself, same as the Bambu Lab filament. I decided as part of this review to do a head-to-head comparison of the products as well, though not a really exhaustive one.

Testing Methodology

My test plan isn’t terribly complex. My main goal is to see if the $15 delta between the two products is worth it (to me). Here we go…

I started off by emailing Atomic’s support to find out if they had any guidance for creating a custom filament profile in Bambu Studio. Their advice was pretty straightforward. Model off the Bambu profile and change a couple of parameters – nozzle temp at 270C and bed temp at 77c. Easy enough!

- Dry each filament in my Creality filament dryer for 8 hours at 60C/15% RH.

- Place each roll in a dry box and let them settle down overnight. Make sure conditions inside are comparable.

- Print this bottle opener.

- 0.4mm nozzle

- 0.2mm layer height

- 6 walls

- 15% Cross-hatch infill

- Textured PEI plate, cleaned before printing with 99% Isopropyl Alcohol

- Print on different, but adjacent spots on the plate

- Compare the results.

The best laid plans, right? Every test plan hits some sort of snag, naturally. Mine was the fact that Atomic’s spool was just too wide to be used inside my dry boxes. As a work-around, I ended up respooling it onto an empty Sunlu spool I has laying around. Bonus – I got to test the respooling tool I recently printed! It took about 10 minutes using a drill to drive the filament.

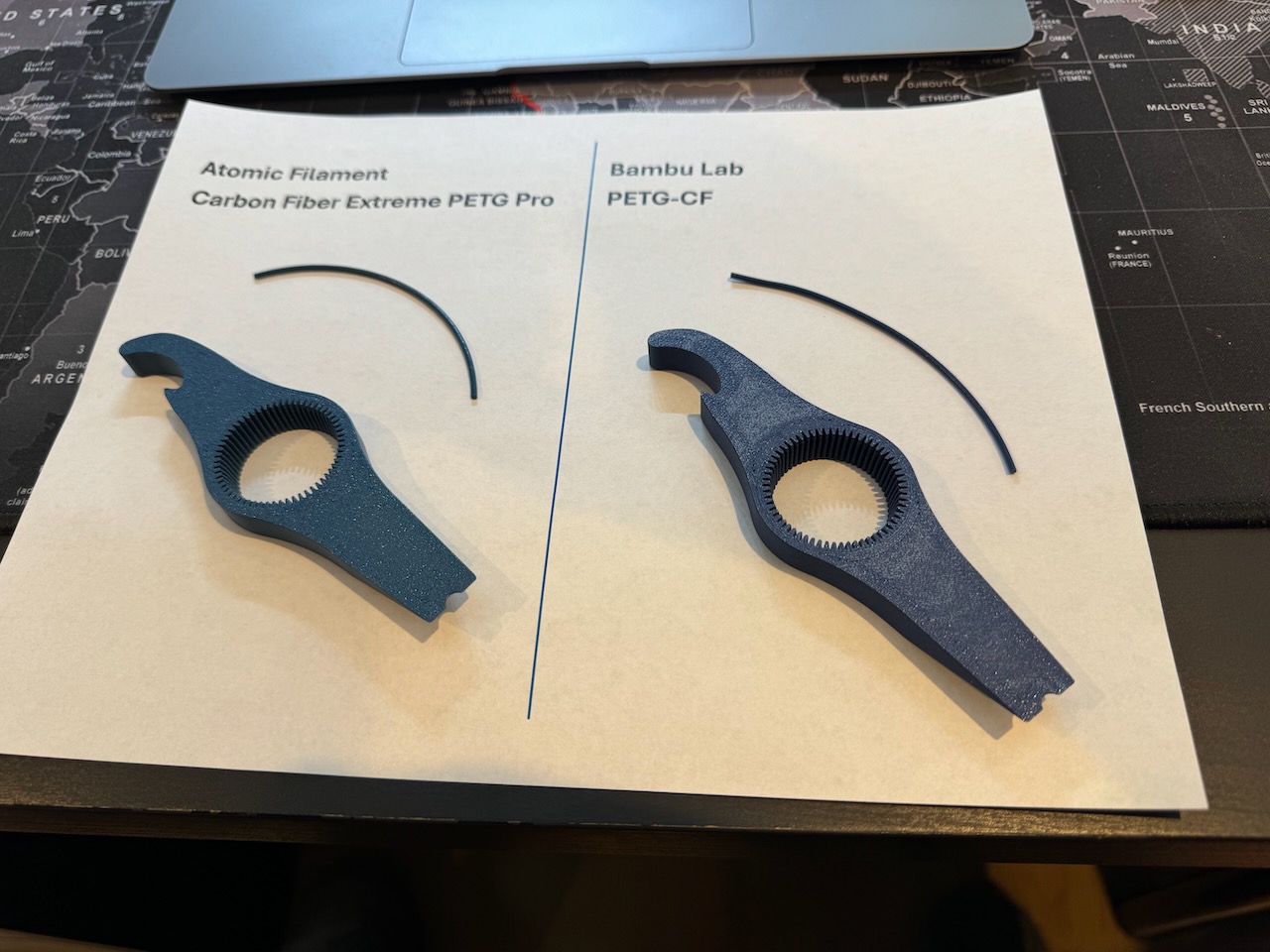

Pre- and Post-Print Filament Appearance

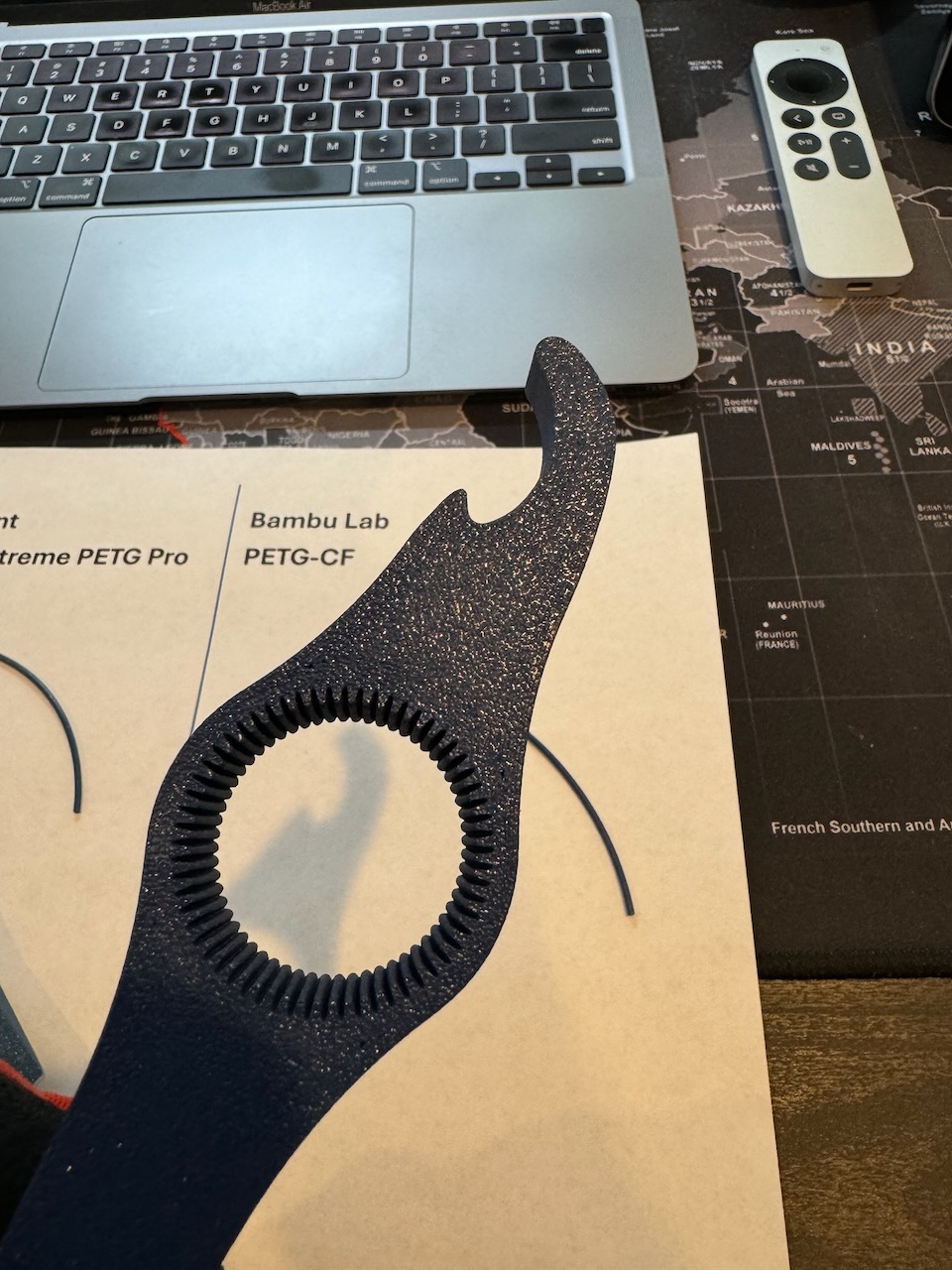

Here you’ll see some pics showing a direct comparison side-by-side. You’ll note the Atomic filament is definitely more glossy in appearance both before as well as after printing. Both products printed beautifully, and the resulting bottle openers look great. I’ve found this model to be tough to really “get right” – as there are lots of tight little spots, often resulting in minor gaps/under-extrusion. Both products had similar issues in the same exact spots, though neither was terrible.

One bit worth noting – the Bambu Lab filament is MUCH more finicky in terms of releasing from the plate. If you’re not careful and don’t wait until the plate cools to at least 40C before you start trying to remove the print, you’ve got a good chance of warping your print or making a bubble of sorts when you pull it off. Notably, the Bambu stuff almost always shows a residue on the bottom of the print (it’s a bit more visible toward the top, by the hook on the back). It comes right off with the application of some heat from a heat gun or a second or two from a flame. I’ve got one of those groovy Hacksmith Lightsaber torches, so I use that. Yes, it’s super badass. Yes, I make dorky lightsaber noises while I’ve got it ignited.

Conclusion

While the Atomic filament looks excellent and also prints beautifully, so does the Bambu filament. Between the $15 premium for the Atomic product and my need to respool it (you may not, depending on how you plan to house the filament), I think my next PETG-CF buy will go back to Bambu Lab. I might try out Protopasta, but their stuff is even more expensive. So maybe not. Flashforge seems to have some PETG-CF that’s reasonably well regarded on Amazon, so there’s that as well.

Still Here?

Have fun watching some timelapses that Octoprint made of each print. Good luck telling which is which without reading the captions…

You must be logged in to post a comment.